NVIDIA X-Mobility and Its Application in Quadruped Robots

Introduction

In recent years, quadruped robots have attracted significant attention in smart manufacturing, security inspection, and scientific research and education. Compared with wheeled or tracked robots, their main advantage is the ability to adapt flexibly to varied terrain. However, enabling quadruped robots to operate reliably in diverse and complex environments remains a persistent challenge for the industry. Against this backdrop, NVIDIA’s X-Mobility model offers a promising path forward. By integrating this model into our quadruped robot platform and combining it with our in-house system integration expertise, we have improved the robot's ability to perceive its surroundings and navigate autonomously.

What is NVIDIA X-Mobility?

NVIDIA’s X-Mobility (End-to-End Generalizable Navigation via World Modeling), released on Hugging Face, is an end-to-end model designed for navigation and motion control. Its primary objective is to enable robots to maintain stable and generalizable mobility across diverse environments.

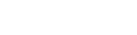

Fig. 1. Semantic segmentation of obstacles within a custom-designed virtual environment.

Technical Features

- Input: RGB images, robot states (e.g., velocity, pose), and optional path information.

- Output: Action commands (linear velocity, angular velocity).

- Core Architecture:

  - Vision Transformer (ViT) encoder for extracting high-level visual features.

  - Recurrent state-estimation network that integrates observations over time, handling temporal sequences and non-Markovian dependencies (cases where a single frame is not enough to choose the right action).

  - Multi-task learning (image reconstruction + semantic segmentation) to learn a more robust latent state representation.

- Deployment Platform: Compatible with TensorRT, deployable on NVIDIA Jetson and other GPUs, supporting real-time inference.
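
The multi-task objective in the architecture list can be made concrete with a small sketch. The code below is a minimal, framework-free illustration, assuming that the action head is regressed against reference velocity commands with mean squared error, that image reconstruction also uses mean squared error, and that segmentation uses per-pixel cross-entropy. The function names and the loss weights `w_action`, `w_rgb`, and `w_seg` are hypothetical choices for illustration, not values taken from X-Mobility's training code.

```python
import math
from typing import Dict, Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length sequences."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and equal length")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def pixel_cross_entropy(probs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean negative log-probability of the true class, one entry per pixel."""
    if len(probs) != len(labels) or not labels:
        raise ValueError("need one probability vector per labeled pixel")
    eps = 1e-12  # guards against log(0) for a zero-probability prediction
    return -sum(math.log(max(p[y], eps)) for p, y in zip(probs, labels)) / len(labels)


def multitask_loss(
    action_pred: Sequence[float], action_target: Sequence[float],
    rgb_pred: Sequence[float], rgb_target: Sequence[float],
    seg_probs: Sequence[Sequence[float]], seg_labels: Sequence[int],
    w_action: float = 1.0, w_rgb: float = 0.5, w_seg: float = 0.5,  # hypothetical weights
) -> Dict[str, float]:
    """Weighted sum of action, reconstruction, and segmentation losses."""
    l_action = mse(action_pred, action_target)  # (linear, angular) velocity error
    l_rgb = mse(rgb_pred, rgb_target)           # flattened pixel intensities
    l_seg = pixel_cross_entropy(seg_probs, seg_labels)
    total = w_action * l_action + w_rgb * l_rgb + w_seg * l_seg
    return {"action": l_action, "rgb": l_rgb, "seg": l_seg, "total": total}


if __name__ == "__main__":
    losses = multitask_loss(
        action_pred=[0.5, 0.1], action_target=[0.4, 0.0],
        rgb_pred=[0.2, 0.8], rgb_target=[0.2, 0.8],
        seg_probs=[[0.9, 0.1], [0.2, 0.8]], seg_labels=[0, 1],  # class 1 = navigable (assumed)
    )
    print(losses)
```

The auxiliary terms push the latent state to encode scene appearance and semantics rather than only what the current action needs; how strongly to weight them against the action term is a tuning choice.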

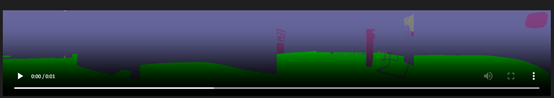

Fig. 2. Semantic segmentation predictions during training, where green denotes navigable regions.

Why We Chose X-Mobility

In the past, quadruped robots relied primarily on predefined gaits and traditional controllers (such as MPC or PID). While this approach is reliable in structured environments, it often performs poorly in dynamic or unknown ones.
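
For contrast, the kind of hand-tuned controller mentioned above can be as small as a single PID loop. The sketch below is a generic textbook PID (without anti-windup) applied to heading error, producing an angular-velocity command; the gains, the output limit, and the `PID` class itself are illustrative assumptions, not our platform's previous controller. Every gain has to be tuned by hand, and nothing in the loop perceives obstacles, which is the gap an end-to-end policy aims to close.

```python
from typing import Optional


class PID:
    """Textbook PID controller with optional symmetric output clamping (no anti-windup)."""

    def __init__(self, kp: float, ki: float, kd: float, output_limit: Optional[float] = None):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit
        self._integral = 0.0
        self._prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        if dt <= 0:
            raise ValueError("dt must be positive")
        self._integral += error * dt
        # No derivative term on the first call, since there is no previous error yet.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        out = self.kp * error + self.ki * self._integral + self.kd * derivative
        if self.output_limit is not None:
            out = max(-self.output_limit, min(self.output_limit, out))
        return out


if __name__ == "__main__":
    # Hypothetical gains; heading error in rad, output is an angular-velocity command in rad/s.
    heading_pid = PID(kp=1.5, ki=0.0, kd=0.1, output_limit=1.0)
    for err in (0.6, 0.4, 0.2):
        print(round(heading_pid.update(err, dt=0.05), 3))
```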

X-Mobility offers several key advantages:

- End-to-End Learning: Removes the need for complex, hand-designed controllers, because the model maps perception directly to control commands.

- Generalization Capability: After being trained in environments such as warehouses, corridors, and narrow passages, the model can be transferred to similar real-world scenarios.

- GPU Acceleration: Leveraging NVIDIA hardware and TensorRT, the model can maintain smooth performance in latency-sensitive scenarios such as dynamic obstacle avoidance.
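
Why latency matters for dynamic obstacle avoidance can be shown with simple arithmetic: during the perception-to-command delay, the robot keeps moving on its previous command. The sketch below computes that "blind" distance and the highest speed that keeps it within a chosen margin; the speeds, latencies, and margin are hypothetical numbers for illustration, not measurements from our platform, and braking distance is ignored.

```python
def blind_distance_m(speed_mps: float, latency_s: float) -> float:
    """Distance covered before a new command can take effect."""
    return speed_mps * latency_s


def max_speed_for_margin(margin_m: float, latency_s: float) -> float:
    """Highest speed whose blind distance stays within the given margin."""
    if latency_s <= 0:
        raise ValueError("latency must be positive")
    return margin_m / latency_s


if __name__ == "__main__":
    # Hypothetical values: 1.0 m/s walking speed, comparing 200 ms and 20 ms end-to-end latency.
    for latency in (0.200, 0.020):
        print(f"{latency * 1000:.0f} ms -> {blind_distance_m(1.0, latency):.3f} m travelled blind")
    print(f"max speed for a 5 cm margin at 20 ms: {max_speed_for_margin(0.05, 0.020):.2f} m/s")
```

At 1.0 m/s, a 200 ms delay means 20 cm of travel before the robot can react, versus 2 cm at 20 ms.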

Our Integration & Experiments

We deployed X-Mobility on our quadruped robot platform and conducted multi-scenario testing:

- Hardware Integration:

  - Quadruped robot equipped with NVIDIA Jetson Orin.

  - Sensors: Intel RealSense camera, IMU, and odometry.

- Software Integration:

  - ROS2-based control framework.

  - TensorRT-optimized inference, reducing latency to the millisecond scale.

- Experimental Scenarios:

  - Factory corridor in simulation: The robot successfully identified obstacles in narrow passages and found alternative side paths around them.

Fig. 3. Semantic segmentation of corridor images performed by the model, where green denotes navigable regions.

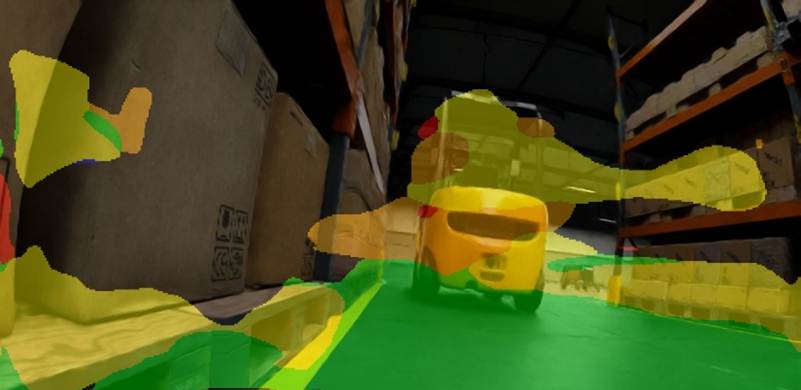

  - Real-world office scenario: The robot can plan paths in an office environment and autonomously avoid desks, chairs, and pedestrians.

Fig. 4. Obstacle avoidance test performed in a real-world environment.
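
In a ROS2-based framework like the one described above, the model's velocity output typically passes through a safety layer before reaching the locomotion controller. The sketch below is a ROS-independent, standard-library version of such a layer, assuming per-axis velocity limits and a staleness timeout after which the robot is commanded to stop; the `CommandGate` class and its default limits are hypothetical. In a real node, `on_model_output` would be called from the inference callback and `command_at` from a fixed-rate timer that publishes a `geometry_msgs/Twist`.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


def _clamp(value: float, limit: float) -> float:
    return max(-limit, min(limit, value))


@dataclass
class CommandGate:
    """Clamps model velocity commands and stops the robot if they go stale."""
    max_linear: float = 0.8   # m/s, hypothetical platform limit
    max_angular: float = 1.0  # rad/s, hypothetical platform limit
    timeout_s: float = 0.2    # hypothetical: stop if no fresh output within 200 ms
    _cmd: Optional[Tuple[float, float]] = field(default=None, repr=False)
    _stamp: Optional[float] = field(default=None, repr=False)

    def on_model_output(self, linear: float, angular: float, stamp: float) -> None:
        """Record the latest (linear, angular) command and its timestamp in seconds."""
        self._cmd = (_clamp(linear, self.max_linear), _clamp(angular, self.max_angular))
        self._stamp = stamp

    def command_at(self, now: float) -> Tuple[float, float]:
        """Command to publish at time `now`; zero velocity if none exists or it is stale."""
        if self._cmd is None or self._stamp is None or now - self._stamp > self.timeout_s:
            return (0.0, 0.0)
        return self._cmd


if __name__ == "__main__":
    gate = CommandGate()
    gate.on_model_output(linear=1.5, angular=-0.3, stamp=10.00)
    print(gate.command_at(10.05))  # fresh output: linear velocity clamped to the limit
    print(gate.command_at(10.50))  # stale output: robot is told to stop
```

Keeping the gate separate from the model means the stop-on-timeout behavior holds even if inference stalls or the perception pipeline crashes.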

Looking Ahead

To further enhance this integration, we will also leverage NVIDIA’s ReMEmbR, which lets robots store, retrieve, and use past experiences in real time. By combining ReMEmbR with X-Mobility and vision-language models (VLMs), quadruped robots will be able not only to understand natural-language commands but also to recall relevant context and past navigation experiences. This capability is essential for robust long-term autonomy in dynamic industrial environments.

In the long term, we expect the convergence of X-Mobility, VLMs, and ReMEmbR to drive progress in smart factories, AI-powered robotics, and automated logistics, positioning quadruped robots as true working partners on the factory floor.

Conclusion

The adoption of NVIDIA X-Mobility has transformed our quadruped robot from a “demonstration platform” into a productive tool. This represents not only a technological breakthrough but also an important milestone in advancing intelligent manufacturing to its next stage.

NVIDIA blog link: https://developer.nvidia.com/blog/streamline-robot-learning-with-whole-body-control-and-enhanced-teleoperation-in-nvidia-isaac-lab-2-3/