Foxlink x NVIDIA From Simulation to Real-World Deployment: Building End-to-End AI Robotics

At Foxlink, we are not just building robots; we are creating a comprehensive ecosystem for intelligent autonomy. With NVIDIA’s full-stack robotics platform, we have integrated autonomous patrolling, dexterous manipulation, and smart manufacturing to bring AI to life across real-world industrial applications.

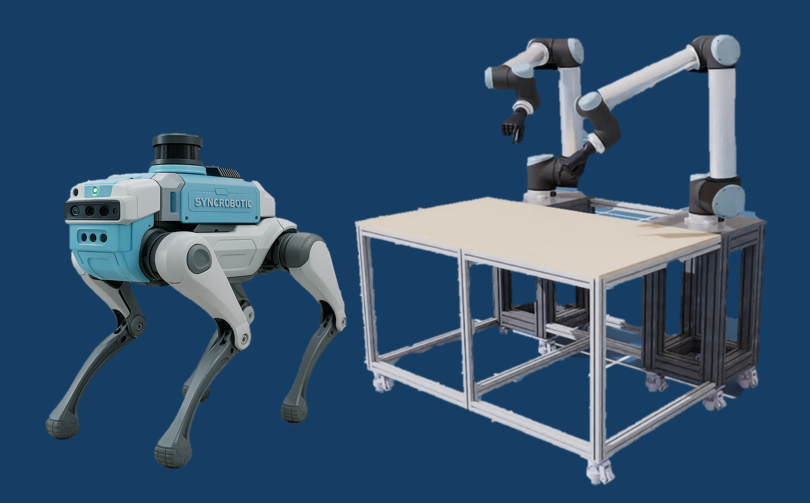

Our development framework is built on NVIDIA Isaac Sim, NVIDIA Jetson AGX, NVIDIA Omniverse, NVIDIA TensorRT, and NVIDIA DGX, enabling rapid iteration from simulation through training to final deployment.

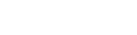

Our primary platforms focus on two domains:

- Foxlink Jeff: An autonomous quadruped patrolling robot

- A dual-arm manipulation system with vision-language understanding, powered by the NVIDIA Isaac GR00T Dexterity workflow

Foxlink Jeff: AI-Powered Quadruped with Perception and Mobility

Foxlink Jeff is an all-terrain AI robotic dog designed for patrolling, industrial inspection, and smart infrastructure integration. Built on the NVIDIA platform, Jeff performs perception, decision-making, and autonomous action in real-world environments. Jeff is not just mobile: it sees, thinks, adapts, and acts as a next-generation AI patrol agent.

Sim2Real Training Workflow: From Isaac Lab to Field Deployment

We designed a complete reinforcement learning (RL) training loop using NVIDIA Isaac Lab, an open-source, modular robot learning framework. Behavioral policies are learned in simulation and then transferred to the NVIDIA Jetson AGX platform for real-time inference and control.

Simulation ➜ Policy Learning ➜ DGX Training ➜ AGX Deployment ➜ Real-World Execution
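The last two stages of this pipeline can be illustrated with a minimal, hypothetical sketch of on-robot policy execution: a fixed-rate loop that reads an observation, evaluates the policy, and sends joint position targets. The small tanh MLP stands in for the trained network (which on Jetson AGX would typically be exported and optimized, for example with TensorRT). The callbacks `read_observation` and `send_joint_targets`, the 50 Hz rate, and the 0.25 action scale are assumptions for illustration, not Isaac Lab or Jetson APIs.

```python
import math
import time
from typing import Callable, List, Sequence

Vector = List[float]


def affine(weights: Sequence[Sequence[float]], bias: Sequence[float], x: Sequence[float]) -> Vector:
    """Compute W @ x + b using plain Python lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


class MlpPolicy:
    """Hypothetical stand-in for a trained policy: one tanh hidden layer, linear output."""

    def __init__(self, w1, b1, w2, b2, action_scale: float = 0.25):
        self.w1, self.b1, self.w2, self.b2 = w1, b1, w2, b2
        self.action_scale = action_scale  # assumed scale from raw output to joint offsets (rad)

    def __call__(self, obs: Sequence[float]) -> Vector:
        hidden = [math.tanh(h) for h in affine(self.w1, self.b1, obs)]
        return [self.action_scale * a for a in affine(self.w2, self.b2, hidden)]


def run_control_loop(
    policy: MlpPolicy,
    read_observation: Callable[[], Vector],
    send_joint_targets: Callable[[Vector], None],
    default_pose: Sequence[float],
    rate_hz: float = 50.0,  # assumed control rate
    steps: int = 100,
) -> None:
    """Evaluate the policy at a fixed rate; actions are offsets from a default joint pose."""
    period = 1.0 / rate_hz
    next_tick = time.monotonic()
    for _ in range(steps):
        action = policy(read_observation())
        send_joint_targets([q0 + a for q0, a in zip(default_pose, action)])
        # Sleep until the next tick so the loop keeps a steady period.
        next_tick += period
        time.sleep(max(0.0, next_tick - time.monotonic()))
```

Treating actions as offsets around a nominal stance is a common convention in legged-locomotion RL, since a zero action then means "hold the default pose"; whether Jeff's policies follow it is not stated here.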

Foxlink has also built a three-tier integration framework between simulation and real-world operation:

- NVIDIA Cosmos world foundation models on DGX generate new data to simulate environments and train perception modules

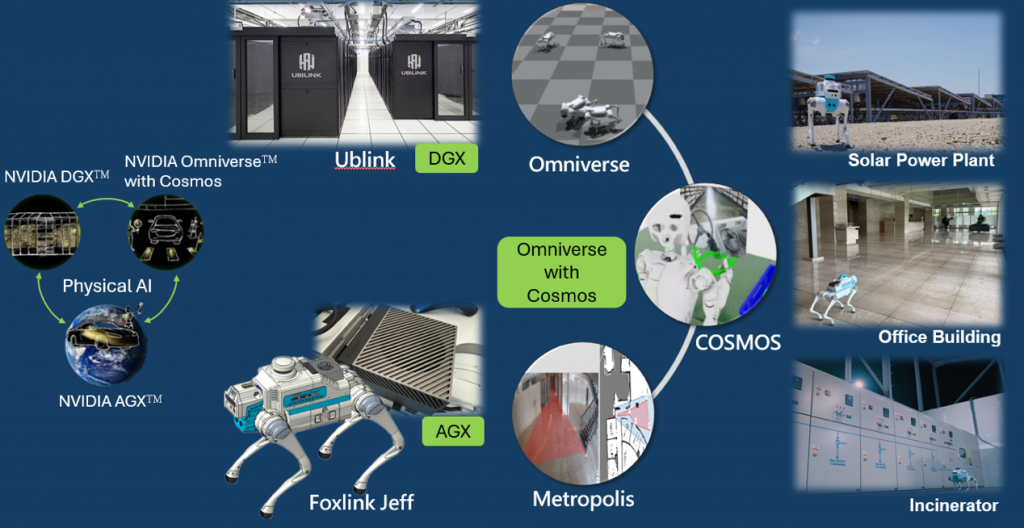

- NVIDIA Metropolis provides live monitoring, camera integration, and floor plan calibration

- Foxlink Jeff executes field tasks and receives real-time sensor fusion updates
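The post does not specify how Jeff fuses its sensor data. As a hypothetical illustration only, a scalar Kalman-style update could blend Jeff's odometry with a position fix from a calibrated Metropolis camera, weighting each source by its variance. The noise values below are made up, and the two map axes are treated independently for simplicity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AxisEstimate:
    """Position along one map axis (m) and its variance (m^2)."""
    mean: float
    var: float


def predict(est: AxisEstimate, odom_delta: float, process_var: float) -> AxisEstimate:
    """Apply an odometry displacement; uncertainty grows by the process noise."""
    return AxisEstimate(est.mean + odom_delta, est.var + process_var)


def update(est: AxisEstimate, fix: float, fix_var: float) -> AxisEstimate:
    """Blend in an external position fix using a scalar Kalman gain."""
    gain = est.var / (est.var + fix_var)
    return AxisEstimate(est.mean + gain * (fix - est.mean), (1.0 - gain) * est.var)


# Example (hypothetical numbers): odometry says Jeff moved 1.0 m; a camera fix reports 1.2 m.
x = predict(AxisEstimate(mean=0.0, var=0.01), odom_delta=1.0, process_var=0.04)
x = update(x, fix=1.2, fix_var=0.05)
# x.mean is now about 1.1 and x.var about 0.025
```

Because the gain is the ratio of the estimate's variance to the total variance, a camera fix that is as uncertain as the prediction pulls the estimate halfway toward the fix, as in the example.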

Via the Metropolis interface, users can:

- Define patrol maps and paths

- Assign mission routes

- View live camera feeds

- Remotely issue behavior commands

The result: a fully remotely operable autonomous patrol system.
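Floor plan calibration and route definition can be sketched as follows. This is a hypothetical model, not the Metropolis API: it assumes the calibration reduces to the pixel location of the map origin plus a uniform meters-per-pixel scale (no rotation), and it converts a patrol route drawn on the floor plan into map-frame waypoints. The route name and coordinates are illustrative.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class FloorPlanCalibration:
    """Hypothetical calibration: map-origin pixel and a uniform scale, no rotation."""
    origin_px: Point
    meters_per_px: float

    def to_map(self, u: float, v: float) -> Point:
        # Image rows grow downward while map y grows upward, so the v axis is flipped.
        x = (u - self.origin_px[0]) * self.meters_per_px
        y = (self.origin_px[1] - v) * self.meters_per_px
        return (x, y)


@dataclass
class PatrolRoute:
    """A mission route as waypoints clicked on the floor plan image (pixels)."""
    name: str
    waypoints_px: List[Point]

    def to_map(self, calib: FloorPlanCalibration) -> List[Point]:
        return [calib.to_map(u, v) for u, v in self.waypoints_px]


def path_length(points: List[Point]) -> float:
    """Total length (m) of the polyline through the waypoints."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


calib = FloorPlanCalibration(origin_px=(100.0, 200.0), meters_per_px=0.05)
route = PatrolRoute("patrol_loop_1", [(100.0, 200.0), (300.0, 200.0), (300.0, 0.0)])
waypoints = route.to_map(calib)  # approximately [(0, 0), (10, 0), (10, 10)] in meters
```

A real deployment would likely need a full homography or similarity transform (rotation included) per floor plan; the scale-and-offset form keeps the sketch short.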

Dual-Arm Manipulation with NVIDIA Isaac GR00T N1

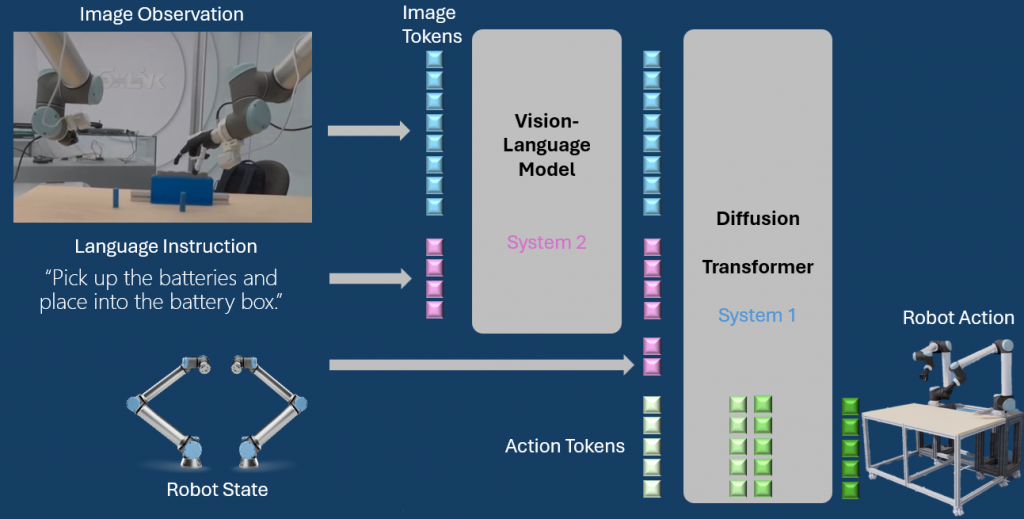

We use the NVIDIA Isaac GR00T N1 robot foundation model to power our intelligent dual-arm robotic system, integrating computer vision and language understanding.

Core components include:

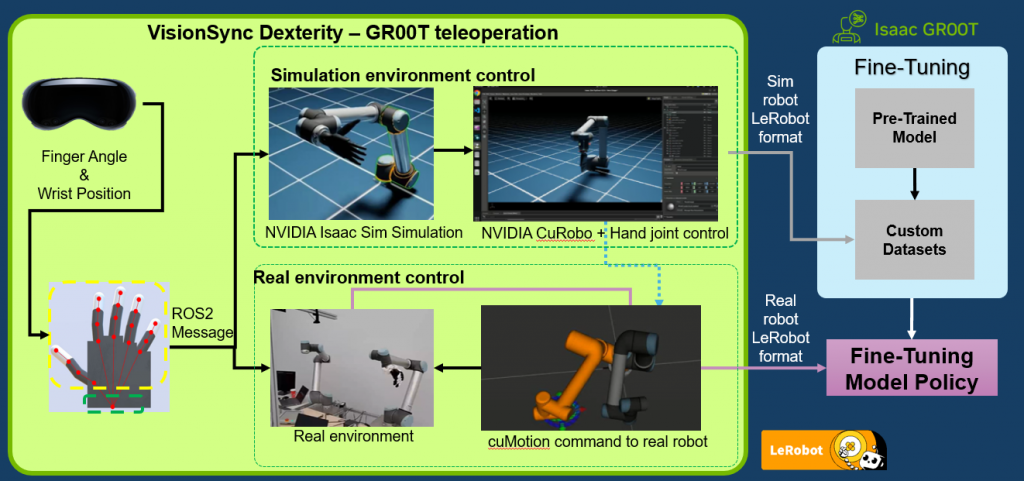

- VisionSync glove + Isaac Sim, a reference robotic simulation application, for real-time teleoperation

- The pre-trained GR00T N1 model for vision-language-action (VLA) two-handed manipulation

- Pre-training uses two visual inputs from the robotic arms, two additional visual inputs from the robot’s XZ and YZ perspectives, and all joint-angle data from the robotic arms and the dexterous hands

- cuMotion + LeRobot protocol for fine-grained motion control
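The training observation described above can be sketched as a per-frame record. The layout is an assumption: the joint counts (7 per arm, 6 per hand), the camera names, the 30 fps rate, and the use of joint-position targets as actions are hypothetical. The keys only loosely follow LeRobot's naming style (`observation.images.*`, `observation.state`, `action`); the LeRobot documentation defines the actual dataset schema.

```python
from typing import Dict, List, Sequence

# Hypothetical robot layout; the real joint counts and camera names are not stated.
ARM_DOF = 7
HAND_DOF = 6
CAMERAS = ("left_arm_cam", "right_arm_cam", "xz_view", "yz_view")


def build_state(left_arm: Sequence[float], right_arm: Sequence[float],
                left_hand: Sequence[float], right_hand: Sequence[float]) -> List[float]:
    """Concatenate all arm and hand joint angles (rad) into one state vector."""
    parts = ((left_arm, ARM_DOF), (right_arm, ARM_DOF),
             (left_hand, HAND_DOF), (right_hand, HAND_DOF))
    for joints, dof in parts:
        if len(joints) != dof:
            raise ValueError(f"expected {dof} joint values, got {len(joints)}")
    return [float(q) for joints, _ in parts for q in joints]


def build_frame(images: Dict[str, object], state: Sequence[float], action: Sequence[float],
                episode_index: int, frame_index: int, fps: float = 30.0) -> Dict[str, object]:
    """Assemble one LeRobot-style frame from four camera views and the joint state."""
    missing = [cam for cam in CAMERAS if cam not in images]
    if missing:
        raise ValueError(f"missing camera views: {missing}")
    if len(action) != len(state):
        raise ValueError("action and state must have the same dimension")
    frame: Dict[str, object] = {f"observation.images.{cam}": images[cam] for cam in CAMERAS}
    frame["observation.state"] = list(state)
    frame["action"] = list(action)
    frame["episode_index"] = episode_index
    frame["frame_index"] = frame_index
    frame["timestamp"] = frame_index / fps
    return frame
```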

The data workflow integrates VisionSync teleoperation with fine-tuning pipelines, enabling a flexible and adaptive architecture. The system continuously improves through learning from demonstration and policy reinforcement.

- Operators wear Apple Vision Pro to track finger angles and wrist positions

- Data is transmitted to the virtual arms in Isaac Sim via ROS 2 messages

- NVIDIA cuMotion controls joint trajectories in both simulation and physical robots

- The robot mimics operator movement in real time, executing grasp and manipulation tasks

- All actions are recorded in LeRobot format as training datasets

- Fine-tuning is performed on DGX, and optimized models are deployed back to hardware
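The step where the robot mimics the operator requires mapping tracked finger angles onto the robot hand's joint limits. One simple, hypothetical way to do this is a per-joint linear remap with clamping, followed by exponential smoothing to damp hand-tracking jitter. The post does not describe Foxlink's retargeting method; the ranges and the smoothing factor below are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class JointRange:
    """Joint limits in radians."""
    low: float
    high: float


def retarget(human_angles: Sequence[float], human_ranges: Sequence[JointRange],
             robot_ranges: Sequence[JointRange]) -> List[float]:
    """Linearly map each human joint angle into the matching robot joint range."""
    if not len(human_angles) == len(human_ranges) == len(robot_ranges):
        raise ValueError("angle and range lists must have equal length")
    targets = []
    for angle, h, r in zip(human_angles, human_ranges, robot_ranges):
        t = (angle - h.low) / (h.high - h.low)
        t = min(1.0, max(0.0, t))  # clamp so commands stay inside the robot's limits
        targets.append(r.low + t * (r.high - r.low))
    return targets


class ExponentialSmoother:
    """Low-pass filter: new = alpha * target + (1 - alpha) * previous."""

    def __init__(self, alpha: float):
        self.alpha = alpha
        self.state: Optional[List[float]] = None

    def __call__(self, target: Sequence[float]) -> List[float]:
        if self.state is None:
            self.state = list(target)
        else:
            self.state = [self.alpha * t + (1.0 - self.alpha) * s
                          for t, s in zip(target, self.state)]
        return list(self.state)
```

Clamping matters because human and robot hands rarely share joint ranges: an operator's finger can flex past what a robot joint allows, and the remap keeps every command within the robot's limits.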

Example task:

Command: “Pick up the batteries and place them into the battery box.”

The post-trained GR00T N1 model interprets the instruction, parses the visual inputs, plans the path, and executes the grasping action.

Our data pipeline enables zero-shot learning, data-efficient training, and robust generalization across tasks.

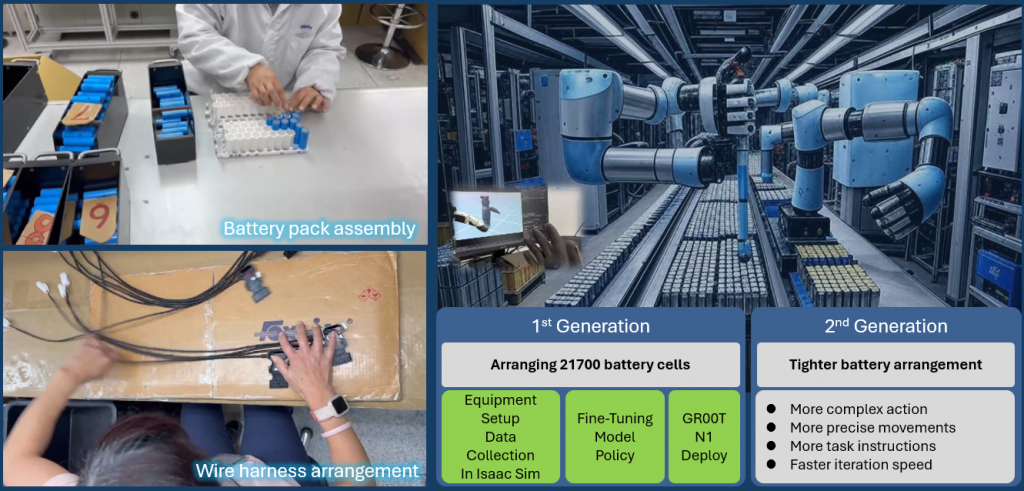

Real Deployment in Foxlink Factory

Deployed use cases include:

- Automatic arrangement of 21700 lithium battery cells

- Wire harness sorting and connection

- Multi-task, low-volume, high-variation production scenarios with rapid adaptation

What’s Next

We are actively integrating the following advancements:

- Multimodal sensing (RGB, thermal, LiDAR, audio)

- Collaboration between quadrupeds and manipulators

- Reinforcement learning with feedback-driven adaptation

- Hybrid cloud-edge deployment

- Mixed-reality interfaces for human-robot interaction (operation + annotation)

Final Thoughts: Redefining Real AI Robotics

At Foxlink, our mission is not just to get robots moving, but to empower them to understand the world, learn from change, and adapt intelligently.

By leveraging the full NVIDIA AI robotics stack, we are redefining how machines see, think, and act in real-world environments.

Join us on our journey to build the next generation of truly autonomous AI robots.